Hello stranger, and welcome! 👋😊

I'm Rasmus Bååth, data scientist, engineering manager, father, husband, tinkerer,

tweaker, coffee brewer, tea steeper, and, occasionally, publisher of stuff I find

interesting down below👇

In the

last post I showed how to use Laplace approximation to quickly (but dirtily) approximate the posterior distribution of a Bayesian model coded in R. This is just a short follow up where I show how to use

importance sampling as an easy method to shape up the Laplace approximation in order to approximate the true posterior much better. But first, what is importance sampling?

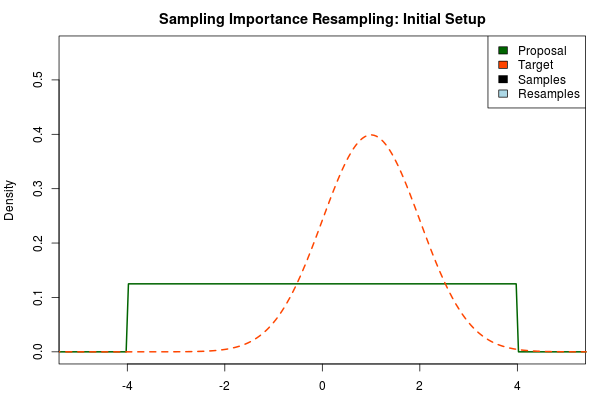

Importance Sampling

Importance sampling is a method that can be used to estimate the integral of a function even though we only have access to the density of that function at any given point. Using a further resampling step, this method can be used to produce samples that are (approximately) distributed according to the density of the given function. In Bayesian statistics this is what we often want to do with a function that describes the density of the posterior distribution. In the case when there is more than one free parameter the posterior will be multidimensional and there is nothing stopping importance sampling from working in many dimensions. Below I will only visualize the one dimensional case, however.

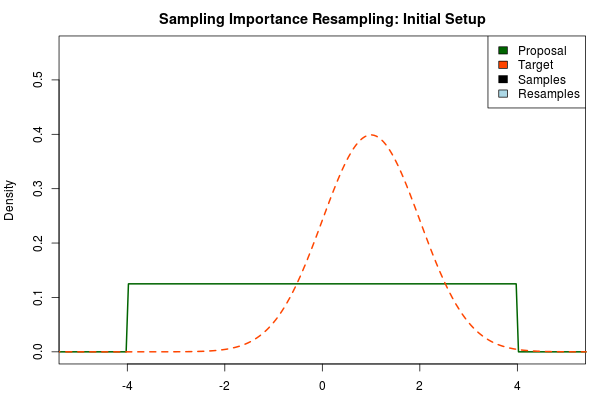

The first step in the importance sampling scheme is to define a proposal distribution that we believe covers most of our target distribution, the distribution that we actually want to estimate. Ideally we would want to choose a proposal distribution that is the same as the target distribution, but if actually could do this we would already be able to sample from the target distribution and importance sampling would be unnecessary. Instead we can make an educated guess. A sloppy guess is to use a uniform proposal distribution that we believe covers most of the density of the target distribution. For example, assuming the target distribution has most of its density in $[-4,4]$ we could setup the importance sampling as:

Here, the target distribution is actually a $\text{Normal}(\mu=1,\sigma=1)$ distribution, but most often we do not know its shape.

Thank you for tuning in! In this post, a continuation of

Three Ways to Run Bayesian Models in R, I will:

- Handwave an explanation of the Laplace Approximation, a fast and (hopefully not too) dirty method to approximate the posterior of a Bayesian model.

- Show that it is super easy to do Laplace approximation in R, basically four lines of code.

- Put together a small function that makes it even easier, if you just want this, scroll down to the bottom of the post.

But first a picture of

the man himself:

I argued

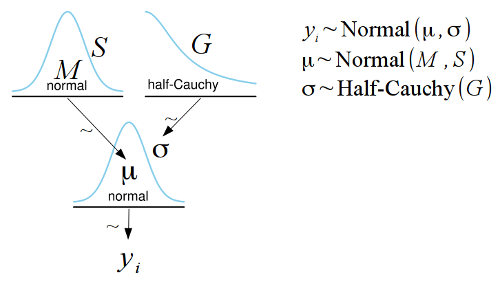

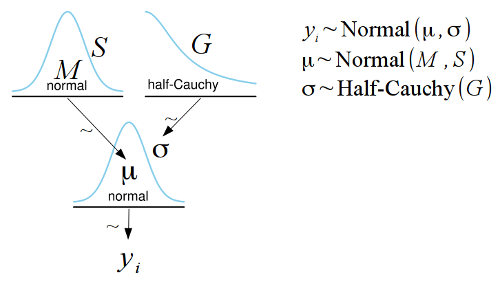

recently that a good way of communicating statistical models graphically was by using the convention devised by John K. Kruschke in his book

Doing Bayesian Data Analysis. John Kruschke describes these diagrams in more detail on his blog:

here,

here and

here. While I believe these kinds of diagrams are great in many ways there is a problem in that they are quite tricky to make. That is, until now!

I have put together a template for the free and open source

LibreOffice Draw which makes it simple to construct Kruschke style diagrams such as the one below:

I’m often irritated by that when a statistical method is explained, such as linear regression, it is often characterized by how it can be calculated rather than by what model is assumed and fitted. A typical example of this is that linear regression is often described as a method that uses

ordinary least squares to calculate the best fitting line to some data (for example, see

here,

here and

here).

From my perspective ordinary least squares is better seen as the computational method used to fit a linear model, assuming normally distributed residuals, rather than being what you actually do when fitting a linear regression. That is, ordinary least squares is one of many computational methods, such as gradient descent or simulated annealing, used to find the maximum likelihood estimates of linear models with normal residuals. By characterizing linear regression as a calculation to minimize the squared errors you hide the fact that linear regression actually assumes a model that is simple and easy to understand. I wish it was more common to write out the full probability model that is assumed when describing a statistical procedure as it would make it easier to quickly grasp the assumptions of the procedure and facilitate comparison between different procedures.

But how to write out the full probability model? In this post I’m going to show two different textual conventions and two different graphical conventions for communicating probability models. (Warning, personal opinions ahead!)

In the

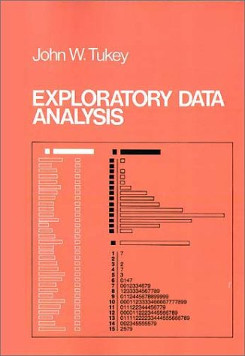

last post I described flogs, a useful transform on proportions data introduced by

John Tukey in his

Exploratory Data Analysis. Flogging a proportion (such as, two out of three computers were Macs) consisted of two steps: first we “started” the proportion by adding 1/6 to each of the counts and then we “folded” it using what was basically a rescaled log odds transform. So for the proportion two out of three computers were Macs we would first add 1/6 to both the Mac and non-Mac counts resulting in the “started” proportion (2 + 1/6) / (2 + 1/6 + 1 + 1/6) = 0.65. Then we would take this proportion and transform it to the log odds scale.

The last log odds transform is fine, I’ll buy that, but what are we really doing when we are “starting” the proportions? And why start them by the “magic” number 1/6? Maybe John Tukey has the answer? From page 496 in Tukey’s EDA:

The desirability of treating “none seen below” as something other than zero is less clear, but also important. Here practice has varied, and a number of different usages exist, some with excuses for being and others without. The one we recommend does have an excuse but since this excuse (i) is indirect and (ii) involves more sophisticated considerations, we shall say no more about it. What we recommend is adding 1/6 to all […] counts, thus “starting” them.

Not particularly helpful… I don’t know what John Tukey’s original reason was (If you know, please tell me in the comments bellow!) but yesterday I figured out a reason that I’m happy with. Turns out that starting proportions by adding 1/6 to the counts is an approximation to the median of the posterior probability of the $\theta$ parameter of a

Bernouli distribution when using Jeffrey’s prior on $\theta$. I’ll show you in a minute why this is the case but first I just want to point out that intuitively this is roughly what we would want to get when “starting” proportions.

Fractions and proportions can be difficult to plot nicely for a number of reasons:

- If the proportions are based on small counts (e.g., two of his three computing devices were Apple products) then the calculated proportions will only take on a number of discrete values.

- Depending on what you have measured there might be many proportions close to the 0 % and 100 % edges of the scale (Five of his five computing devices were non-Apple products).

- There is no difference made between a proportion that is the result of few counts (33 %, one out of three, were Apple products) and large counts (33 %, three out of nine, were Apple products).

Especially irritating is that the first two of these problems results in overplotting that makes it hard to see what is going on in the data. So how to plot proportions without running into these problems? John Tukey to the rescue!

Just came home from the

14th Rhythm Perception and Production Workshop, this time taking place at the University of Birmingham, UK. Like the last time in Leipzig there were lots of great talks and much food for thought. Some highlights, from my personal perspective:

- Bayesian perception of isochronous rhythms presented by

Darren Rhodes and Massimiliano Di Luca. Interesting idea and nice to see a fellow Bayesian.

- Slowness in music performance and perception: An analysis of timing in Feldman’s “Last Pieces” presented by

Dirk Moelants. Fun talk that dealt with how “slow” was interpred by performers of Feldman’s “Last Pieces”. Here is a not too slow rendition of some

“Last Pieces” on youtube.

- Parameter estimation of sensorimotor synchronization models presented by

Nori Jacoby, Peter Keller and Bruno Repp. Technical and interesting talk and I believe they have some papers out on this already.

- Two interesting talk on resonance models and EEG: Scrutinizing subjective rhythmization: A combined ERP/oscillatory approach by

Christian Obermeier and Sonja A. Kotz, and From Sounds to Movement and Back: How Movement Shapes Neural Representation of Beat and Meter presented by

Sylvie Nozaradan, Isabelle Peretz, André Mouraux

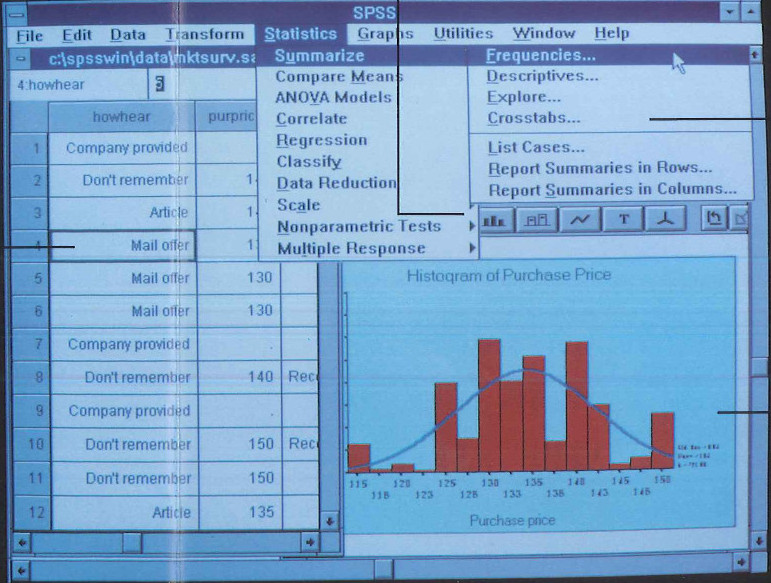

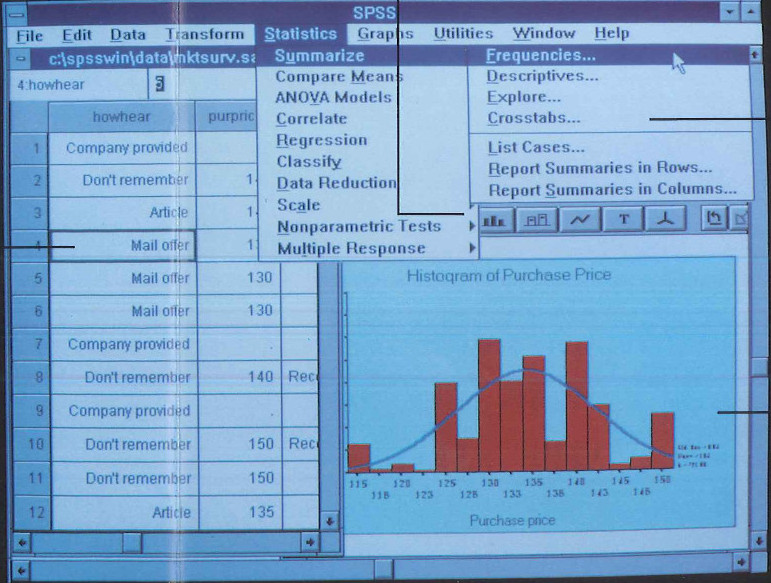

For some reason someone dropped a pamphlet advertising SPSS for Windows 3.0 in my mail box at work. This means that the pamphlet, and the advertised version of SPSS, should be at least 20 years old! These days I’m happily using R for everything but if I was going to estimate any models 20 years ago SPSS actually looked quite OK. In the early 90s my interests in computing were more related to making Italian plumbers rescue princesses than estimating regression models.

The pamphlet is quite fun to read, there are many things in it that feels really out of date and which shows how much have happened in computing in the last 20 years. Let me show you some of the highlights!

Here is a picture from the pamphlet of an SPSS session in action:

Actually it still looks very similar to the SPSS I saw my colleague use yesterday, so maybe not that much have changed in 20 years… Well let’s look at the hardware requirements:

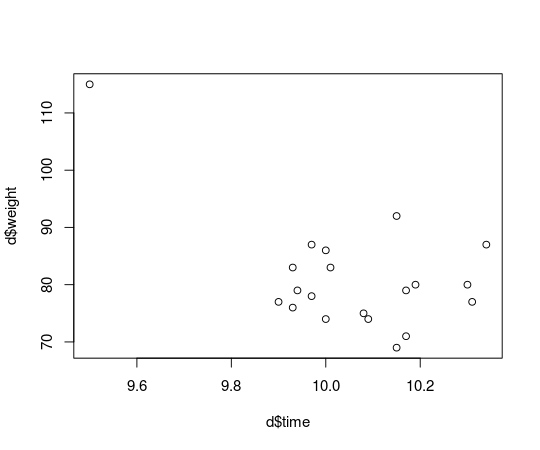

So in

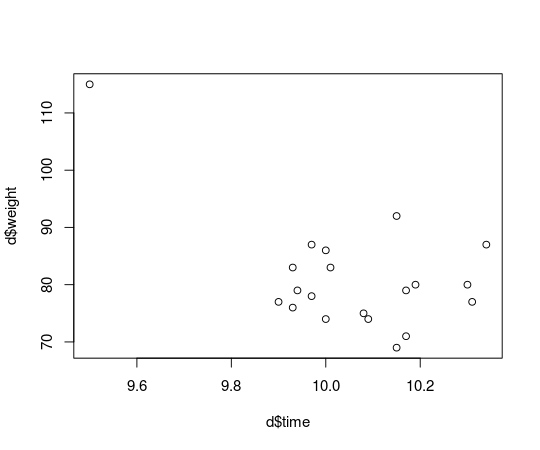

the last post I showed how to run the Bayesian counterpart of Pearson’s correlation test by estimating the parameters of a bivariate normal distribution. A problem with assuming normality is that the normal distribution isn’t robust against outliers. Let’s see what happens if we take the data from

the last post with the finishing times and weights of the runners in the men’s 100 m semi-finals in the 2013 World Championships in Athletics and introduce an outlier. This is how the original data looks:

## runner time weight

## 1 Usain Bolt 9.92 94

## 2 Justin Gatlin 9.94 79

## 3 Nesta Carter 9.97 78

## 4 Kemar Bailey-Cole 9.93 83

## 5 Nickel Ashmeade 9.90 77

## 6 Mike Rodgers 9.93 76

## 7 Christophe Lemaitre 10.00 74

## 8 James Dasaolu 9.97 87

## 9 Zhang Peimeng 10.00 86

## 10 Jimmy Vicaut 10.01 83

## 11 Keston Bledman 10.08 75

## 12 Churandy Martina 10.09 74

## 13 Dwain Chambers 10.15 92

## 14 Jason Rogers 10.15 69

## 15 Antoine Adams 10.17 79

## 16 Anaso Jobodwana 10.17 71

## 17 Richard Thompson 10.19 80

## 18 Gavin Smellie 10.30 80

## 19 Ramon Gittens 10.31 77

## 20 Harry Aikines-Aryeetey 10.34 87

What if Usain Bolt had eaten mutant cheese burgers both gaining in weight and ability to run faster?

d[d$runner == "Usain Bolt", c("time", "weight")] <- c(9.5, 115)

plot(d$time, d$weight)

I recently had a lot of fun coding up a Bayesian Poisson model of football scores in the Spanish la Liga. I describe the model, implemented using R and JAGS, in these posts: part 1, part 2, part 3. Turns out that Gianluca Baio and Marta Blangiardo already developed a similar Bayesian model with some extra bells and whistles. For example, they modeled both the defense skill and and offense skill of each team while I used an overreaching skill for each team.